But what if your eyes tell a different story?

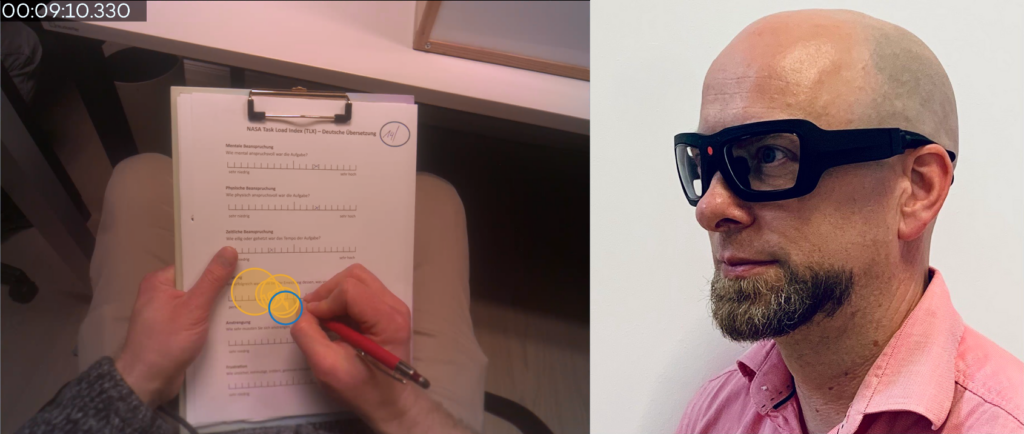

A recent study combined the VPS Smart Glasses, which have integrated eye tracking, with machine learning to examine how well our eye movements reflect our actual attention and whether we can truly assess our own mental workload. The results were clear: eye tracking can objectively detect attention performance, and it is more reliable than self-assessment.

Participants wore smart glasses while performing a task designed to measure attention and concentration. Afterward, they rated how demanding the task felt using a standard self-evaluation tool (NASA-TLX). During the task, the smart glasses tracked subtle eye behaviors such as:

- How long someone focused on a specific point

- How often their gaze wandered

- How frequently they blinked
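To make these three measures concrete, here is a minimal Python sketch of how they might be summarized for one session. It is an illustration only, not the study's pipeline: the `Fixation` record, the `summarize_session` helper, the sample values, and the choice to count a "gaze shift" as each move from one fixation to the next are all assumptions made for this example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Fixation:
    """One period of steady gaze (hypothetical record format)."""
    start_s: float
    end_s: float

    @property
    def duration_s(self) -> float:
        return self.end_s - self.start_s


def summarize_session(fixations, blink_times_s, session_length_s):
    """Summarize one session into three simple eye-behavior measures."""
    if session_length_s <= 0:
        raise ValueError("session_length_s must be positive")
    durations = [f.duration_s for f in fixations]
    # Assumption: each transition between consecutive fixations is one gaze shift.
    gaze_shifts = max(len(fixations) - 1, 0)
    return {
        "mean_fixation_duration_s": mean(durations) if durations else 0.0,
        "gaze_shifts_per_min": gaze_shifts * 60 / session_length_s,
        "blinks_per_min": len(blink_times_s) * 60 / session_length_s,
    }


# Made-up sample data for a 30-second recording.
fixations = [Fixation(0.0, 0.5), Fixation(0.75, 1.0), Fixation(1.25, 2.0)]
blink_times_s = [0.6, 1.1]
summary = summarize_session(fixations, blink_times_s, session_length_s=30.0)
print(summary)
```

Summaries like this, one per participant and session, are the kind of input a pattern-recognition model can work with.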

The real insight came when this data was analyzed using machine learning, a way of training a computer to recognize patterns. The model learned to spot which eye movement behaviors correlated with high, average, or low attention levels.

Two results stood out:

- A specific model, called a Multi-Layer Perceptron (MLP), could predict a person’s attention level with more than 80% accuracy.

- Meanwhile, the participants’ self-assessments did not line up with their actual performance. People could not reliably judge how well they were concentrating.
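For readers curious what training such a model can look like, below is a rough sketch using scikit-learn's `MLPClassifier`. Everything in it is assumed for illustration: the data is randomly generated, the class centers are invented, and the network size and settings are arbitrary rather than taken from the study. Only the six feature names and the three attention levels come from the study description, so the accuracy it prints says nothing about the published result.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Feature names follow the measures listed in the study methodology.
FEATURES = [
    "fixation_count", "saccade_count", "gaze_velocity",
    "fixation_duration", "blink_rate", "aoi_attention_time",
]
LABELS = ["low", "average", "high"]


def make_synthetic_sessions(n_per_class=60, seed=0):
    """Generate fake, already-numeric feature rows (NOT real eye-tracking data)."""
    rng = np.random.default_rng(seed)
    rows, labels = [], []
    for class_index, label in enumerate(LABELS):
        # Invented class centers so the toy classes are separable.
        center = np.full(len(FEATURES), float(class_index))
        rows.append(rng.normal(loc=center, scale=0.5, size=(n_per_class, len(FEATURES))))
        labels.extend([label] * n_per_class)
    return np.vstack(rows), np.array(labels)


X, y = make_synthetic_sessions()
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Scale the features, then fit a small MLP; the hyperparameters are arbitrary choices.
model = make_pipeline(
    StandardScaler(),
    MLPClassifier(hidden_layer_sizes=(16,), max_iter=2000, random_state=0),
)
model.fit(X_train, y_train)

predictions = model.predict(X_test)
accuracy = model.score(X_test, y_test)
print(f"Accuracy on synthetic held-out data: {accuracy:.2f}")
```

The key idea is the split between training and held-out sessions: the model is judged only on data it has not seen, which is what makes an accuracy figure meaningful.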

This points to a deeper insight: we often assume that putting in more mental effort automatically means we’re performing better. But that’s not always the case. The study suggests that performance might depend more on how efficiently we manage our attention, not just how hard we try. These subtle patterns of attention, often invisible to us, can be revealed through eye tracking.

Many high-pressure roles rely on people staying sharp. But if someone is used to operating under stress or fatigue, they might appear fine and even feel fine, while their cognitive load is actually higher than perceived. This study showed that objective eye tracking data can detect these shifts in attention.

What is also exciting is that the test used mobile smart glasses (not a lab setup), which made the approach highly practical.

Think:

- Pilots and drivers being monitored for fatigue

- Surgeons or emergency workers receiving cognitive load insights in real-time

- Personalized learning, training, or therapy adapted to actual focus levels

and so much more.

The study shows that:

- Eye tracking can reliably classify attention performance

- Self-assessed mental workload does not tell the full story

- Machine learning makes it possible to do this accurately and automatically

This combination of objective data and intelligent analysis opens the door to safer, more efficient, and more personalized systems, from smart vehicles to workplace safety tools.

And it starts with something as simple as where you are looking and for how long.

Study methodology:

- Participants: 20 individuals, ages 20-39

- Duration: The study ran over four months; each session took around 10-15 minutes per person

- Device: VPS19 smart glasses (mobile eye tracker)

- Data Collected: fixation count, saccade count, gaze velocity, fixation duration, blink rate and area-of-interest attention time

- Tasks: Visual attention test (d2-R) + NASA-TLX self-assessment

- Analysis Method: Machine learning models trained on eye-tracking data

- Study conducted by: Adrian Vulpe-Grigorasi, Zvetelina Kren, Djordje Slijepcevic, Roman Schmied and Vanessa Leung

- Partner institutions: St. Pölten University of Applied Sciences and Viewpointsystem GmbH

- Read the full article here: https://doi.org/10.1109/Informatics62280.2024.10900810