One of the major challenges facing augmented and mixed reality is this: you have access to nearly unlimited data, but how do you let your machine know which data you want and need in a given situation? And how could the machine soon learn to know this all by itself?

Many demo videos of AR/MR glasses or, more recently, smart contact lenses show a seamless user experience: relevant overlays appear at exactly the right moment and at the right angle, apparently without the user having to do anything. But let’s face the facts: to date, there is no market-ready solution for this kind of interface. Instead, you have to tell your device explicitly what you want it to do, using sweeping hand gestures, immature voice commands, or impractical touchpads.

THE ALTERNATIVES?

At worst: an unfiltered information overload, a mixed-reality hell like the one the artist Keiichi Matsuda depicts, admittedly in a particularly dramatic form, in his futuristic video “Hyper Reality”.

At best: an interface that feels like no interface at all, one where your device understands your surroundings and what you want to do without any explicit input, and shows you exactly the information you need at the right moment.

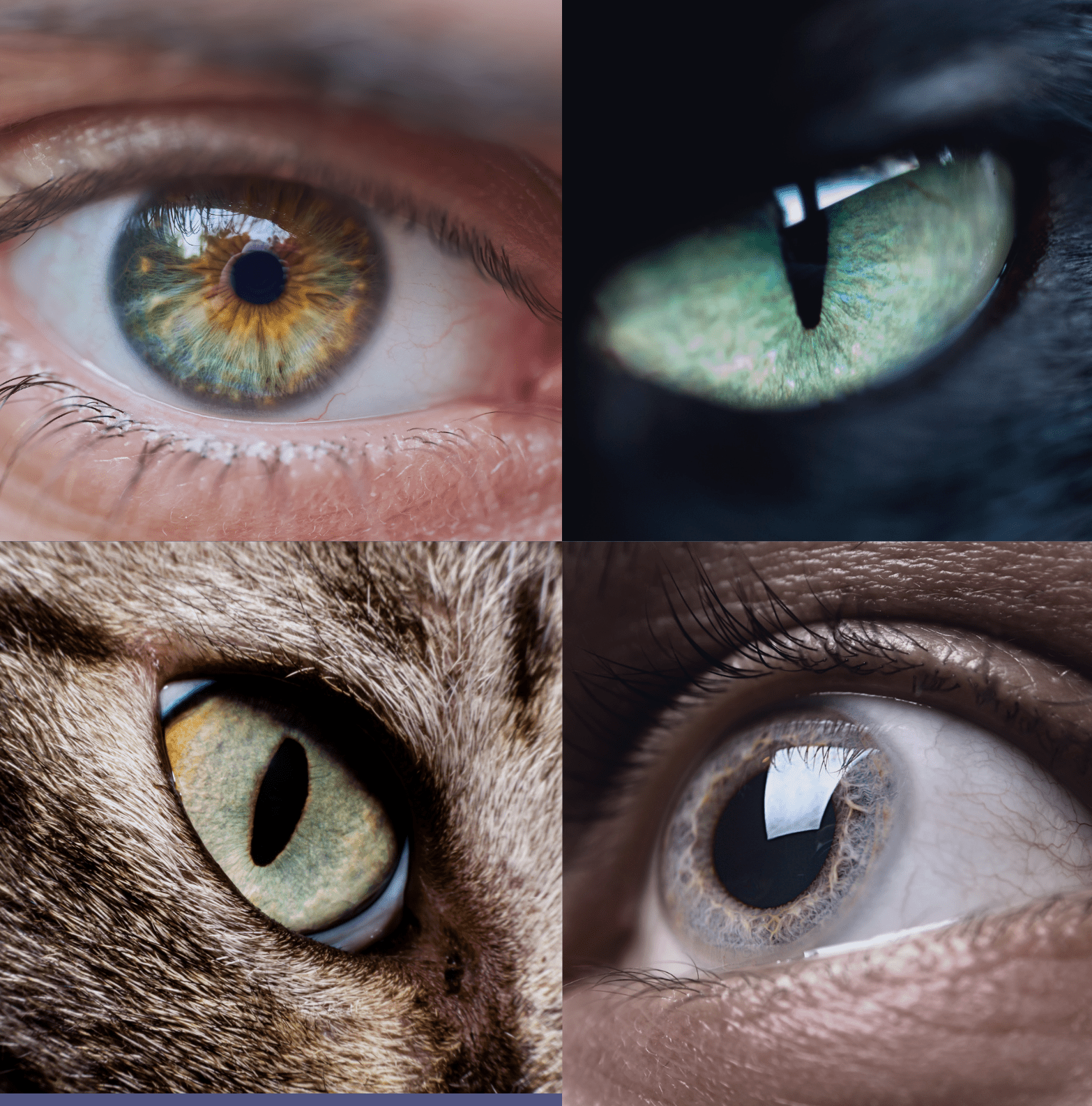

At Viewpointsystem, we are convinced that we have found a decisive key to this digital immersion: Digital Iris technology. Combining Eye Hyper-Tracking with Mixed Reality, it detects our need for information through the interface of the eyes. Where are we directing our gaze, and which objects in our surroundings are we currently interested in? We can recognize this from the movements of the eyes. From pupil dilation, we can detect whether the user is reacting emotionally in a given situation, for example whether they are excited or stressed. This allows Digital Iris to place the right information in front of the eye at exactly the right moment. In the near future, you will no longer have to tell your machine what you want: it will already know.
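The post does not say how Digital Iris processes these signals internally. Purely as an illustration of the two cues named above, the sketch below pairs a textbook dispersion-threshold (I-DT) fixation detector, which finds where the gaze dwells, with a simple check of pupil diameter against a personal baseline. This is not Viewpointsystem’s method: the `GazeSample` format, the function names, and the thresholds (1° of dispersion, 100 ms minimum fixation, 15% pupil dilation) are all hypothetical assumptions.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class GazeSample:
    # Hypothetical sample format for an eye tracker stream.
    t: float      # timestamp in seconds
    x: float      # horizontal gaze angle in degrees
    y: float      # vertical gaze angle in degrees
    pupil: float  # pupil diameter in millimetres


def dispersion(window):
    """I-DT dispersion: horizontal spread plus vertical spread of the window."""
    xs = [s.x for s in window]
    ys = [s.y for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples, max_dispersion=1.0, min_duration=0.1):
    """Return (start_t, end_t, centre_x, centre_y) for each detected fixation."""
    fixations = []
    i, n = 0, len(samples)
    while i < n:
        # Grow the window until it spans at least min_duration seconds.
        j = i
        while j < n and samples[j].t - samples[i].t < min_duration:
            j += 1
        if j >= n:
            break
        if dispersion(samples[i:j + 1]) <= max_dispersion:
            # Extend the fixation while the gaze stays within the threshold.
            while j + 1 < n and dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            window = samples[i:j + 1]
            fixations.append((window[0].t, window[-1].t,
                              mean(s.x for s in window),
                              mean(s.y for s in window)))
            i = j + 1
        else:
            # Not a fixation: slide the window start forward by one sample.
            i += 1
    return fixations


def pupil_arousal(samples, baseline_mm, threshold=0.15):
    """True if mean pupil diameter exceeds the baseline by more than `threshold` (relative)."""
    current = mean(s.pupil for s in samples)
    return (current - baseline_mm) / baseline_mm > threshold


# Example: 0.3 s of steady gaze near (5, 5), then a jump (saccade) to (20, 0).
samples = [GazeSample(t=k * 0.02, x=5.0 + 0.1 * (k % 2), y=5.0, pupil=4.0)
           for k in range(16)]
samples += [GazeSample(t=0.32 + k * 0.02, x=20.0, y=0.0, pupil=4.0)
            for k in range(3)]

fixations = detect_fixations(samples)
print(fixations)
print(pupil_arousal(samples, baseline_mm=4.0))
```

Mapping each fixation centre to an object in the scene would additionally require a scene camera and object recognition, and pupil size also changes with ambient light, so a real system would have to control for that. Both are outside the scope of this sketch.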

There is a metaphor we like to use: Digital Iris can read you like a loving partner. When you feel like snacking on some cheese in front of the TV, your partner can tell from your look and offers you some Camembert or Tilsiter, knowing what you want before you do, simply from looking at you and knowing you well.

Over the next few years, we will bring Digital Iris to perfection, adding a large number of components to the system. Your device will be able to interpret the subconscious eye movements and other biometric signals of people in general, and it will also learn to recognize your very own personal reactions. It will use the gathered information to predict individual behavior. By combining this information with biometric data and machine learning, the system will know how you tend to react, what interests you, and what your current state is in a given situation, both rationally and emotionally.

An example: I’m in a foreign city on my way to an important meeting. It is hot, and I am not very good at finding my way around big cities. My smart glasses recognize my flickering gaze and notice that my pulse is rising. They also know that I have only a four-minute walk ahead of me but still 15 minutes to spare. Hence the suggestion: “Take off your jacket and walk slowly to the meeting.” I avoid a stressful situation because my system advises me based on my personal parameters and indicators.
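The reasoning in this scenario can be written down as a simple rule. The sketch below is purely illustrative: the `Context` fields, the 15% pulse-rise threshold, the saccade rate used as a proxy for a “flickering” gaze, and the slack rule are hypothetical assumptions, not how Digital Iris actually decides. In the system described above, such thresholds would presumably be learned per user rather than hard-coded.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Context:
    pulse_bpm: float              # current heart rate
    resting_pulse_bpm: float      # hypothetical personal baseline, learned over time
    saccades_per_second: float    # hypothetical proxy for a "flickering" gaze
    walk_minutes: float           # estimated walking time to the destination
    minutes_until_meeting: float  # time left before the appointment


def suggest(ctx: Context, pulse_rise: float = 0.15,
            max_saccade_rate: float = 3.0) -> Optional[str]:
    # Treat the user as stressed if the pulse is clearly above their personal
    # baseline AND the gaze is restless (both thresholds are assumptions).
    stressed = (ctx.pulse_bpm > ctx.resting_pulse_bpm * (1 + pulse_rise)
                and ctx.saccades_per_second > max_saccade_rate)
    # Spare time once the walk is accounted for (15 - 4 = 11 minutes here).
    slack = ctx.minutes_until_meeting - ctx.walk_minutes
    # Only advise slowing down if the user is stressed and can afford to.
    if stressed and slack >= ctx.walk_minutes:
        return "Take off your jacket and walk slowly to the meeting."
    return None


meeting = Context(pulse_bpm=95, resting_pulse_bpm=70, saccades_per_second=4.5,
                  walk_minutes=4, minutes_until_meeting=15)
print(suggest(meeting))  # prints: Take off your jacket and walk slowly to the meeting.
```

A fixed rule like this covers only the one situation it was written for; the machine-learning approach described in the post aims to replace such hand-picked thresholds with ones learned from each user’s own reactions.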

The list of scenarios goes on. The eye itself becomes the interface. The mind becomes the selector of what is important to me. And that is the future of mixed reality.